Today’s users expect a dynamic application experience, where text messages appear instantly and the real-time activities of colleagues are always visible.

For years, we relied on a third-party service to power this functionality. However, our international expansion in 2024 presented a critical challenge: data sovereignty and new regulatory requirements meant our existing provider was no longer a compliant option. This constraint became an opportunity to build our own real-time infrastructure from the ground up. By developing a custom solution based on Server-Sent Events (SSE), we not only met our compliance requirements but also significantly reduced our operational costs and improved message latency. In this post, I will share how we transitioned from a third-party service to an in-house SSE architecture.

About Server-Sent Events

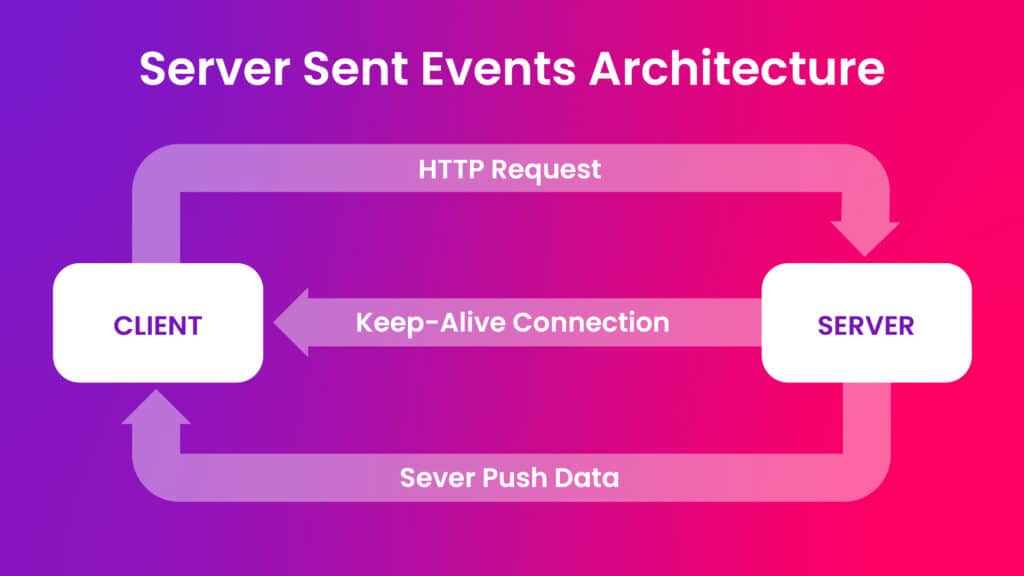

SSE offers a simple and efficient way to build real-time web applications where data needs to be pushed from the server to the client. Unlike WebSockets, which provide a more complex, two-way communication channel that requires a protocol upgrade from HTTP, SSE is a unidirectional technology built directly on top of standard HTTP. Our early experiments showed that WebSockets often fail behind corporate firewalls, which may block the Upgrade header that the WebSocket handshake relies on.

The core of SSE lies in the EventSource API, which allows a web page to subscribe to a stream of text-based events from a server. The server, in turn, keeps the HTTP connection open and sends data to the client as it becomes available. If the connection is lost, the browser automatically attempts to reconnect and sends the ID of the last event it received in the Last-Event-ID request header, so the server can resume the stream where it left off.

Event Stream format example

    event: newMessage
    data: {"user": "Alice", "text": "Hello!"}
    id: 1692632147971-0

    event: viewerChange
    data: {"user": "Bob"}
    id: 1692632147972-0

    : This is a comment that the server sends periodically to keep the connection alive

A blank line (two consecutive newline characters) terminates each event and separates it from the next message.
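To make the wire format concrete, here is a minimal Python sketch that serializes events and keep-alive comments. The function names `format_sse_event` and `format_sse_comment` are hypothetical helpers for illustration, not part of our gateway code.

```python
from typing import Optional


def format_sse_event(data: str, event: Optional[str] = None,
                     event_id: Optional[str] = None) -> str:
    """Serialize one event in the text/event-stream wire format."""
    lines = []
    if event is not None:
        lines.append(f"event: {event}")
    # A payload containing newlines must be split across several data: lines.
    for chunk in data.split("\n"):
        lines.append(f"data: {chunk}")
    if event_id is not None:
        lines.append(f"id: {event_id}")
    # The trailing blank line is what dispatches the event on the client.
    return "\n".join(lines) + "\n\n"


def format_sse_comment(text: str) -> str:
    """Serialize a comment line; clients ignore it, so it works as a heartbeat."""
    return f": {text}\n\n"
```

Calling `format_sse_event('{"user": "Bob"}', event="viewerChange", event_id="1692632147972-0")` yields the second event shown in the example, including its terminating blank line.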

System Architecture

Serving SSE from a single server is straightforward, but this architecture breaks down when scaling horizontally, because an event can arrive at an instance other than the one holding the target client's connection. To support multiple, stateless gateway instances, our system introduces Redis as a central message bus, decoupling event ingestion from client delivery.

Specifically, we selected AWS Redis Serverless because it is ideal for our spiky traffic patterns, where 50% of daily activity occurs in a 5-hour window. It seamlessly scales storage and I/O during peak hours while automatically reducing costs during off-peak times.

Publisher Data Flow and Structures

When a source event targeted for a patient channel is received, a real-time distribution process begins. Our architecture uses three key data structures in Redis:

Presence Store

A Redis Sorted Set, keyed as rtg:presence, tracks all currently active users. When a user interacts with the Real Time Gateway, their user ID is added to the set with the current timestamp as its score (ZADD).

This presence data is critical for efficiency. Before processing any new event, the system checks if there are any active users on the target channel. A user is considered online if their last activity timestamp is recent (e.g., within the last 30 minutes). If no users are present, the incoming event is discarded. This simple check is highly effective, eliminating more than 90% of unnecessary event processing and significantly reducing the load on the downstream system.

    -- Update a user's last-seen timestamp
    ZADD rtg:presence $CURRENT_TIMESTAMP $USER_ID

    -- Check if a user is online by fetching their timestamp
    -- The application logic then verifies if it's within a 30-minute window.
    ZSCORE rtg:presence $USER_ID
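The presence check can be sketched in a few lines of Python. This is an illustration under assumptions: a plain dictionary stands in for the `rtg:presence` sorted set so the example runs without Redis, and the names `PresenceStore` and `should_process` are hypothetical.

```python
import time
from typing import Dict, Optional

ONLINE_WINDOW_SECONDS = 30 * 60  # the 30-minute window described above


class PresenceStore:
    """In-memory stand-in for the rtg:presence sorted set (illustration only)."""

    def __init__(self) -> None:
        self._last_seen: Dict[str, float] = {}

    def touch(self, user_id: str, now: Optional[float] = None) -> None:
        # Plays the role of: ZADD rtg:presence <now> <user_id>
        self._last_seen[user_id] = time.time() if now is None else now

    def is_online(self, user_id: str, now: Optional[float] = None) -> bool:
        # Plays the role of: ZSCORE rtg:presence <user_id>, then the window check.
        now = time.time() if now is None else now
        last_seen = self._last_seen.get(user_id)
        return last_seen is not None and now - last_seen <= ONLINE_WINDOW_SECONDS


def should_process(store: PresenceStore, target_user_id: str,
                   now: Optional[float] = None) -> bool:
    """Return False for events whose target user has not been seen recently."""
    return store.is_online(target_user_id, now)
```

Passing `now` explicitly keeps the logic deterministic in tests; in production code the current time would simply be read from the clock.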

Notification Stream

The rtg:notifications stream acts as a central, multiplexed message bus for inter-server communication. Instead of delivering events directly, it contains all recent events for all users in a single, capped stream (XADD MAXLEN). Each Real Time Gateway instance subscribes to this stream and uses it like a pub/sub topic to receive a unified flow of events.

Upon insertion, Redis generates an event ID that is unique within the stream and monotonically increasing (e.g., 1692632147971-0). This ID, combining a millisecond timestamp with a sequence number, is crucial for clients to reliably retrieve missed messages after a disconnection.
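Because the ID has two numeric parts, it should be compared as a pair of integers rather than as a string. A minimal sketch, with hypothetical helper names `parse_stream_id` and `is_newer`:

```python
from typing import Tuple


def parse_stream_id(stream_id: str) -> Tuple[int, int]:
    """Split '<milliseconds>-<sequence>' into a tuple that sorts correctly."""
    ms, _, seq = stream_id.partition("-")
    # An ID written without a sequence part is treated as sequence 0 here.
    return int(ms), int(seq or 0)


def is_newer(candidate: str, last_seen: str) -> bool:
    """True if candidate was added to the stream after last_seen."""
    return parse_stream_id(candidate) > parse_stream_id(last_seen)
```

Plain string comparison would order `1692632147971-10` before `1692632147971-9`, which is why the numeric parse matters when several events share the same millisecond.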

While this architecture simplifies service discovery, it has a known trade-off: every event payload is broadcast to every gateway server, regardless of where the target user is connected. This is a deliberate choice favoring architectural simplicity over minimizing network traffic between servers.

    -- Add a new event to the global notification stream.
    -- The stream is capped at approximately 10,000 entries.
    XADD rtg:notifications MAXLEN ~ 10000 * data $PAYLOAD channelId $USER_ID

    -- Each gateway server reads events from the stream since the last ID it processed.
    -- This command blocks for up to 5 seconds, waiting for new events.
    XREAD COUNT 100 BLOCK 5000 STREAMS rtg:notifications $LAST_EVENT_ID

History Stream

To handle client reconnections gracefully, each online user has their own dedicated history stream, keyed as rtg:history:$USER_ID. This stream acts as a temporary, per-user buffer containing their most recent events. When a client reconnects and provides its Last-Event-ID, the gateway can query this stream (XRANGE) to send only the messages missed since the disconnection. To keep the memory footprint low, the entire stream is set to expire automatically after a period of inactivity, which purges data from disconnected users.

    -- Add a compressed event to a user's personal history stream and set it to expire.
    -- This is executed as a MULTI transaction for atomicity.
    MULTI
    XADD rtg:history:$USER_ID MAXLEN ~ $NUMBER_OF_MESSAGES * data $PAYLOAD
    EXPIRE rtg:history:$USER_ID $EXPIRATION_IN_SECONDS
    EXEC

    -- On reconnect, retrieve all events since the client's last known event ID.
    -- XRANGE bounds are inclusive, so the entry matching $LAST_EVENT_ID is returned
    -- as well and must be skipped (or use an exclusive "(" start on Redis 6.2+).
    XRANGE rtg:history:$USER_ID $LAST_EVENT_ID +
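The replay logic can be illustrated without Redis. In the sketch below, a bounded deque stands in for one per-user history stream; `HistoryBuffer` and `missed_since` are hypothetical names, and unlike `MAXLEN ~` the cap here is exact rather than approximate.

```python
from collections import deque
from typing import Deque, List, Tuple

Event = Tuple[str, str]  # (stream ID, payload)


def _key(stream_id: str) -> Tuple[int, int]:
    """Turn '<milliseconds>-<sequence>' into a numerically sortable tuple."""
    ms, _, seq = stream_id.partition("-")
    return int(ms), int(seq or 0)


class HistoryBuffer:
    """In-memory stand-in for one rtg:history:$USER_ID stream (illustration only)."""

    def __init__(self, max_len: int) -> None:
        # deque(maxlen=...) drops the oldest entry when full, like a capped stream.
        self._events: Deque[Event] = deque(maxlen=max_len)

    def add(self, stream_id: str, payload: str) -> None:
        self._events.append((stream_id, payload))

    def missed_since(self, last_event_id: str) -> List[Event]:
        """Return events strictly newer than last_event_id, oldest first."""
        cutoff = _key(last_event_id)
        return [event for event in self._events if _key(event[0]) > cutoff]
```

The strict comparison excludes the event the client already received, so a reconnecting client does not see a duplicate.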

Gateway Server

When a client establishes a connection, the server registers them in an in-memory subscription map, ready to forward events. The gateway server’s main role is to listen to the global rtg:notifications stream from Redis. As events arrive on this stream, the server forwards them in real time to subscribed clients via their open SSE connections.

To handle network interruptions, the gateway also manages reconnections. If a client reconnects and provides a Last-Event-ID, the server performs a history lookup against that user’s dedicated rtg:history:$USER_ID stream in Redis to deliver any missed messages before streaming live events from the notification stream.
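A minimal sketch of the in-memory subscription map follows. It assumes each open connection is represented by a callable that writes one serialized event; the `SubscriptionMap` class and its method names are hypothetical, not our production code.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Set

Send = Callable[[str], None]  # writes one serialized event to an open connection


class SubscriptionMap:
    """Maps a channel ID to the local connections subscribed to it."""

    def __init__(self) -> None:
        self._subs: DefaultDict[str, Set[Send]] = defaultdict(set)

    def subscribe(self, channel_id: str, send: Send) -> None:
        self._subs[channel_id].add(send)

    def unsubscribe(self, channel_id: str, send: Send) -> None:
        self._subs[channel_id].discard(send)
        if not self._subs[channel_id]:
            del self._subs[channel_id]  # avoid leaking empty entries

    def dispatch(self, channel_id: str, payload: str) -> int:
        """Forward payload to local subscribers; return how many received it."""
        targets = list(self._subs.get(channel_id, ()))
        for send in targets:
            send(payload)
        return len(targets)
```

Since every gateway sees every event, `dispatch` returning 0 is the common case: the target user is simply connected to a different instance, and the event is dropped locally.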

While the server expects clients to request text/event-stream for a persistent SSE connection, it also includes a fallback mechanism that uses text/plain to support older clients or restrictive environments via long-polling.

Connection Lifecycle Management

To prevent connection imbalances during our frequent rolling updates, we programmatically terminate every SSE connection on the server side after 10 to 15 minutes. This forced reconnection allows our load balancer to redistribute connections evenly across gateway instances. The strategy also gives us two other important benefits: it improves security by forcing regular re-authentication, and it enhances observability by generating new traces with each connection.
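One plausible way to implement this, shown here purely as an assumption, is to assign each connection a randomized lifetime within that range so that clients do not all reconnect at the same moment. The helper names are hypothetical.

```python
import random

MIN_LIFETIME_SECONDS = 10 * 60
MAX_LIFETIME_SECONDS = 15 * 60


def pick_connection_lifetime(rng: random.Random) -> float:
    """Pick a lifetime between 10 and 15 minutes, spread uniformly."""
    return rng.uniform(MIN_LIFETIME_SECONDS, MAX_LIFETIME_SECONDS)


def should_close(opened_at: float, lifetime: float, now: float) -> bool:
    """True once a connection has outlived its assigned lifetime."""
    return now - opened_at >= lifetime
```

A gateway would call `pick_connection_lifetime` once when the connection opens and check `should_close` from its heartbeat loop.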

Handling Frontend Connections with SSE

Implementing SSE in a production environment with modern security requirements reveals some of its limitations. The standard EventSource interface does not support sending custom HTTP headers, making it impossible to pass an Authorization header for authenticating client connections.

To solve this, we bypassed the native implementation in favor of the battle-tested fetch-event-source library. Adopting this library provided immediate benefits beyond just authentication. It gave us granular control over connection retry logic, allowing us to implement a more resilient reconnection strategy. Furthermore, it offered built-in parsing for the incoming data stream.

The Long-Polling Fallback: Ensuring 100% Reliability

Even with a solid SSE implementation, network realities mean that a small percentage of users (less than 1% in our case) operate behind firewalls or proxies that block long-lived, persistent connections. To guarantee message deliverability for these clients, we built a long-polling fallback mechanism.

The client starts with an SSE connection and monitors it. If the SSE connection runs into trouble, such as stalling or timing out, the client automatically opens a long-polling connection as a backup. Once the main SSE connection is healthy again and receiving new messages, the backup long-polling connection is closed. This ensures we always have a working connection.
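The switching logic amounts to a small state machine. The sketch below is an illustration only: the `FallbackMonitor` name and the 30-second stall threshold are assumptions, not values from our client.

```python
from dataclasses import dataclass

STALL_TIMEOUT_SECONDS = 30.0  # hypothetical threshold for declaring the stream stalled


@dataclass
class FallbackMonitor:
    """Decides whether the long-polling backup should be running."""
    last_sse_activity: float
    polling_active: bool = False

    def on_sse_message(self, now: float) -> None:
        # Any SSE traffic proves the stream is healthy, so the backup can stop.
        self.last_sse_activity = now
        self.polling_active = False

    def tick(self, now: float) -> bool:
        """Call periodically; returns True while the backup should be polling."""
        if now - self.last_sse_activity > STALL_TIMEOUT_SECONDS:
            self.polling_active = True
        return self.polling_active
```

Keeping the decision separate from the network code makes the behavior easy to test with simulated timestamps.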

Data-Driven Rollout Strategy

To ensure a seamless transition with zero disruption, we used a parallel rollout strategy. Both the legacy system and the new SSE-based architecture ran side-by-side in production for several months. We began by routing a small percentage of traffic to the new SSE channels, using feature flags to control the flow.
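Percentage-based routing of this kind is often implemented by hashing a stable identifier into a bucket. The following sketch shows that general technique under assumptions; the flag name `sse-gateway` and both function names are hypothetical, and our feature-flag tooling may work differently.

```python
import hashlib


def rollout_bucket(user_id: str, flag_name: str = "sse-gateway") -> int:
    """Map a user to a stable bucket in the range 0 to 99."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def use_new_system(user_id: str, rollout_percent: int) -> bool:
    """True if this user falls inside the current rollout percentage."""
    return rollout_bucket(user_id) < rollout_percent
```

Because the bucket is deterministic, a user who is enabled at 10% stays enabled as the percentage grows, which keeps their experience consistent during the rollout.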

Throughout this period, we relied heavily on a dedicated monitoring dashboard to compare the two systems in real time. This dashboard aggregated critical metrics, including the total number of events received by each system and the end-to-end message latency from the event source all the way to the browser. Once we had confirmed that the new system was meeting (and often exceeding) the performance and reliability of the old one, we were ready to flip the switch and decommission the legacy system.

Results

We’re thrilled with how the new system is performing. It has fundamentally improved our real-time notification system’s performance and efficiency across the board. Here’s a quick look at the highlights:

- A Faster, Cheaper Day-to-Day: We’re now delivering 60 million events daily with messages arriving 32% faster. We also cut our server footprint by 63%, lowering our infrastructure costs on top of the savings from dropping our third-party vendor.

- Rock-Solid at Peak Load: When things get busy, the system doesn’t even flinch. It handles 1,000 requests/second at the publisher, and we’ve seen our Redis instance peak at an incredible 100,000 requests/second.

